What is Antioch?

On account of the chain split and associated errors caused by a bug in the version of Substrate we were using for the runtime of the Babylon network, we have determined that the Babylon testnet is not recoverable.

As a result we will be launching a new network (hopefully by the end of next week) with an upgraded Substrate version and various minor improvements.

Unlike most releases, the changes in Antioch will be very minor from the perspective of regular testnet participants. However, in just over a month we will be releasing the Sumer testnet, which will bring some more exciting changes to the testnet platform.

Migration of Babylon State

The main thing to be aware of for our testnet users is that we plan to migrate most of the data from Babylon directly over to Antioch.

This will include:

- Memberships

- Forum content

- Proposal history

- Balances

Balances will be migrated as of the time of the fork (block #2,528,244), while everything else will be taken from as late as possible before the new chain is launched.

If your balance at height #2,608,346 differs from what you have on the new chain, DM @bwhm or @blrhc in our Discord server and we will make up the difference!

What happened to Babylon?

Timeline

- The runtime upgrade extrinsic (transaction) was done with `sudo.setCode`, and included in block #2528244 with hash `0x02819d141da567a67f1fa0b3e447ea6b64f3a0a4f8fa042049b2721294874c4e`.
  - Before the transaction was made, the Polkadot telemetry apparently showed some discrepancies between the nodes.
    - It is unclear whether this had any impact on what transpired.

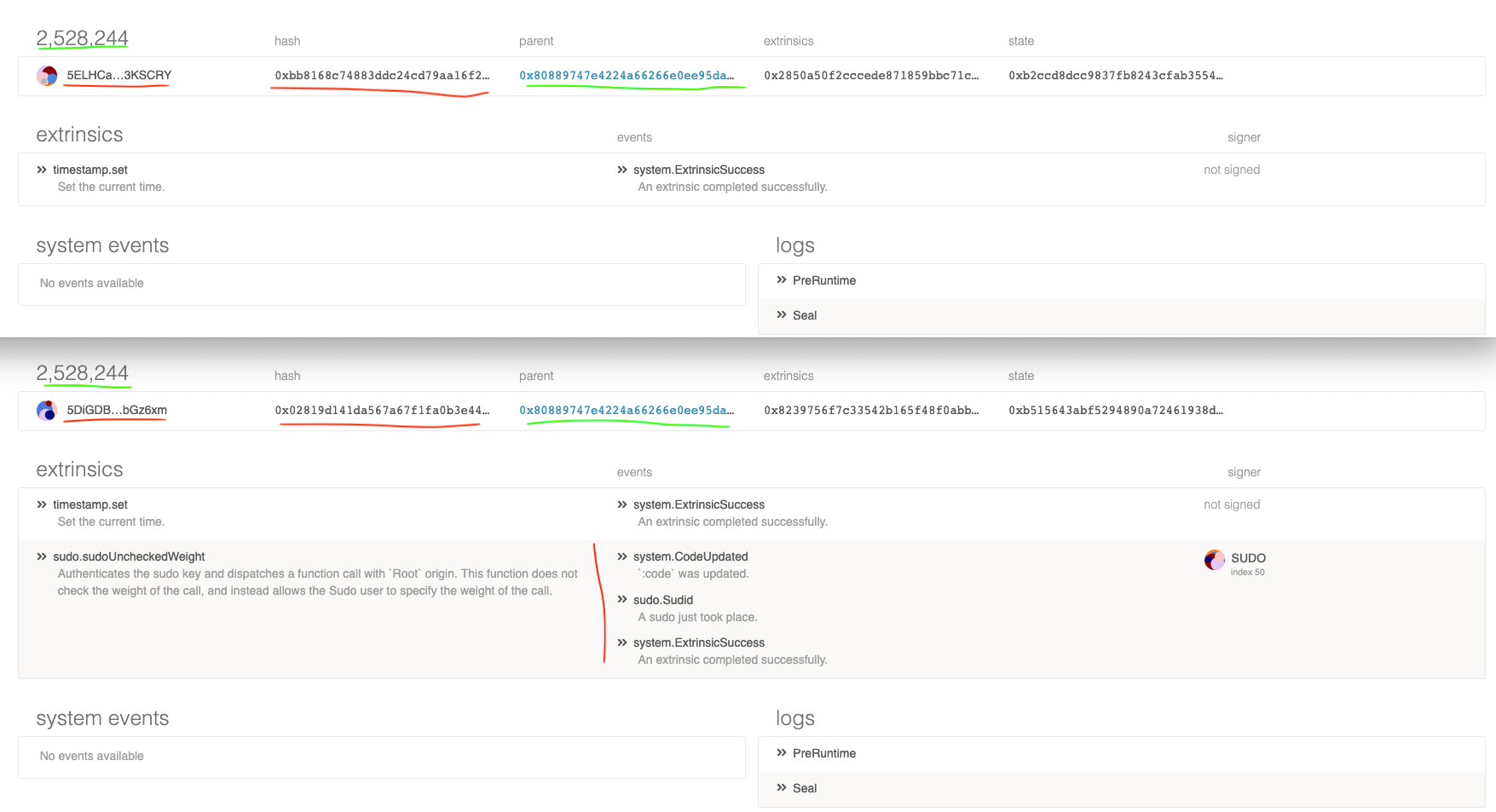

- Two blocks with height #2528244 were produced (see image below).
  - All the logs we have seen thus far first saw the one with hash `0x0281...4c4e`, but some then had a "reorg" to `0xbb81...0e19`.
    - All of these saw a new reorg back to `0x0281...4c4e`, meaning all nodes were still on the same chain in terms of accepted blocks.

- However, not all nodes executed the aforementioned `sudo.setCode` extrinsic.
  - The RPC nodes that Pioneer connects to by default all appear to have executed the upgrade, thus changing the runtime `specVersion` from 9 (before the upgrade) to 11 (after executing). Note that "skipping" 10 is not the issue.
  - A majority of the Validators, on the other hand, did not execute the code, and were/are still reporting to be running `specVersion` 9.

- Despite having different consensus rules, both chains accepted the incoming blocks and finalized the new blocks.

- After what appeared (at the time) to have been a successful upgrade, a post was made in proposal 212 stating that the runtime had been upgraded.

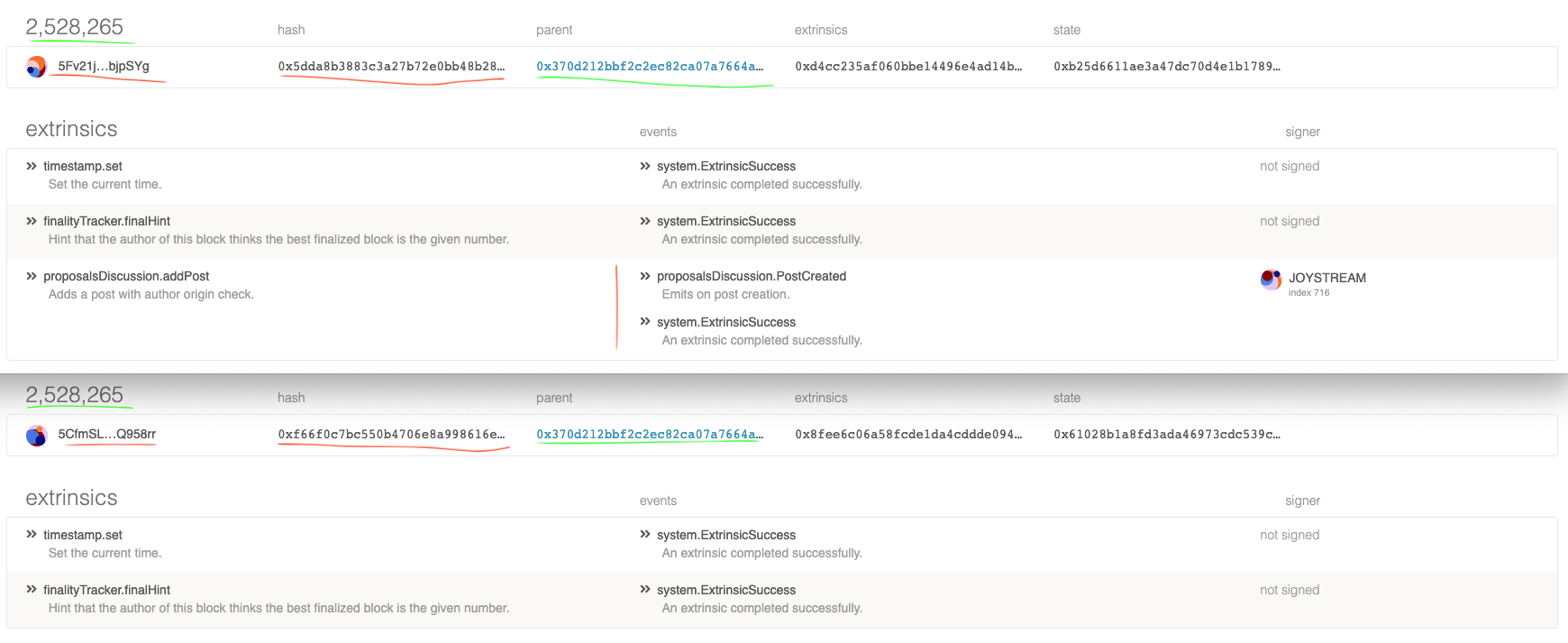

- After submitting the `proposalsDiscussion.addPost` transaction, Pioneer (on `specVersion` 11) returned an error saying "bad signature". The transaction was submitted again, this time successfully.
  - It appears that the first transaction was accepted by the nodes on `specVersion` 9, but not by those on 11.

- At block #2528265, the chain on `specVersion` 9 included said `proposalsDiscussion.addPost`, and the chain split was now a true fork (see image below).
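The timeline does not establish why the first `addPost` was rejected as a "bad signature" by nodes on `specVersion` 11 while nodes on 9 accepted it. One mechanism consistent with that observation, which we have not confirmed for Babylon: FRAME-based Substrate runtimes typically include the `frame_system::CheckSpecVersion` signed extension, which mixes the runtime's `spec_version` into the payload that gets signed without sending it in the transaction, so a node running a different spec version rebuilds a different payload and the signature fails to verify. The Python sketch below is a toy model of that idea only: an HMAC stands in for a real sr25519 public-key signature, and the key, call bytes and function names are hypothetical.

```python
import hashlib
import hmac

# Toy model only: an HMAC over the payload stands in for a real sr25519
# signature, and the byte layout is illustrative, not SCALE encoding.


def signing_payload(call: bytes, spec_version: int) -> bytes:
    # The spec version is appended to the signed payload but never sent
    # with the transaction, so the verifier must supply its own value.
    return call + spec_version.to_bytes(4, "little")


def sign(key: bytes, call: bytes, spec_version: int) -> bytes:
    return hmac.new(key, signing_payload(call, spec_version), hashlib.sha256).digest()


def node_accepts(key: bytes, call: bytes, signature: bytes, node_spec_version: int) -> bool:
    # The node rebuilds the payload using the spec version of the runtime it
    # is actually running, then checks the signature against that payload.
    expected = sign(key, call, node_spec_version)
    return hmac.compare_digest(expected, signature)


key = b"hypothetical-signer-key"
call = b"proposalsDiscussion.addPost"  # hypothetical stand-in for the encoded call

sig_for_9 = sign(key, call, 9)  # hypothetical: a signature made against specVersion 9
print(node_accepts(key, call, sig_for_9, 9))   # True: accepted by a node still on 9
print(node_accepts(key, call, sig_for_9, 11))  # False: rejected as a bad signature on 11
```

Under these assumptions, the same signed bytes are valid on one set of nodes and invalid on the other, which is exactly the kind of transaction that lets two groups of nodes include different blocks.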

Aftermath

As soon as the chains were following two different sets of consensus rules, a split was of course inevitable, and this particular transaction was merely the spark that ignited it. In fact, we are still not sure why the split wasn't immediate.

However, the most interesting aspect is that the prevailing chain:

- included the `sudo.setCode` upgrade transaction,
- which produced a `system.ExtrinsicSuccess` event,
- without actually executing the code.

New nodes trying to sync up will all execute the code, move to `specVersion` 11, and will not accept the prevailing block #2528265. Furthermore, from what we have seen, all nodes that stop and restart will crash at the end of the era.

This made it infeasible to keep the testnet running, even though it appears that most (if not all) nodes that "stayed" on 9 are not experiencing any issues.
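Why a fresh node cannot follow the prevailing chain can be illustrated with a toy Python model; this is not Substrate code and does not reproduce the underlying Substrate bug or the end-of-era crash. Assumptions, all hypothetical: a block is a height plus named extrinsics, each signed extrinsic records the spec version it was signed for, a node rejects a block containing an extrinsic signed for a spec version other than its own, and the blocks between #2528244 and #2528265 are empty placeholders. A node that correctly applies `sudo.setCode` while replaying the chain stalls just before #2528265, while a node that, like most validators, never applied the upgrade keeps importing.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional

# Toy model only, not Substrate: a block is a height plus named extrinsics,
# and each signed extrinsic records the spec version it was signed for.


@dataclass
class Extrinsic:
    name: str
    signed_for_spec: Optional[int] = None  # None for the sudo upgrade in this model


@dataclass
class Block:
    height: int
    extrinsics: list[Extrinsic] = field(default_factory=list)


def sync(chain: list[Block], start_spec: int = 9, applies_upgrade: bool = True) -> tuple[int, int]:
    """Replay `chain` in order; return (last imported height, final spec version)."""
    spec = start_spec
    last_imported = chain[0].height - 1
    for block in chain:
        # Reject the block if any signed extrinsic targets a different spec version.
        if any(x.signed_for_spec not in (None, spec) for x in block.extrinsics):
            return last_imported, spec  # sync stalls at the previous block
        for x in block.extrinsics:
            if x.name == "sudo.setCode" and applies_upgrade:
                spec = 11  # the upgrade takes effect from the next block onwards
        last_imported = block.height
    return last_imported, spec


# The prevailing chain: the upgrade at #2528244, empty placeholder blocks,
# then an addPost at #2528265, assumed to be signed for specVersion 9.
prevailing = [
    Block(2528244, [Extrinsic("sudo.setCode")]),
    *[Block(h) for h in range(2528245, 2528265)],
    Block(2528265, [Extrinsic("proposalsDiscussion.addPost", signed_for_spec=9)]),
]

print(sync(prevailing))                         # (2528264, 11): a fresh node stalls
print(sync(prevailing, applies_upgrade=False))  # (2528265, 9): a node left on 9 keeps going
```

The only difference between the two runs is whether the node applies the upgrade, which mirrors how the same block history is acceptable to the nodes that "stayed" on 9 but not to any node that syncs from scratch.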

Debugging

We believe the cause is related to a known bug in an older version of Substrate, loosely described in this issue. Babylon was running on a commit of Substrate that predated the fix by a margin of only two weeks.

We have been trying to confirm that this is in fact the exact and only cause of the chain split, but have been unable to do so. The upgrade "worked" for a minority of the nodes, and the runtime had been changed successfully on our staging testnet.

Because of this, we are not only pulling in this particular fix, but have chosen to move to a much newer version of Substrate for the release of Antioch.

Disclaimer

All forward looking statements, estimates and commitments found in this blog post should be understood to be highly uncertain, not binding and for which no guarantees of accuracy or reliability can be provided. To the fullest extent permitted by law, in no event shall Joystream, Jsgenesis or our affiliates, or any of our directors, employees, contractors, service providers or agents have any liability whatsoever to any person for any direct or indirect loss, liability, cost, claim, expense or damage of any kind, whether in contract or in tort, including negligence, or otherwise, arising out of or related to the use of all or part of this post, or any links to third party websites.